Migrate Events Service Data Using the Load Balancer

The time required to migrate from Events Service 4.5.x to 23.x varies with the data volume. We therefore recommend that you migrate delta data and metadata in multiple iterations so that as little data as possible remains to be migrated to Events Service 23.x after cutover.

If your Events Service 4.5.x nodes use DNS, the data migration may be delayed, which can result in data loss. The length of the delay depends on the number of agents you have configured.

Before You Begin

Before you use the migration utility:

- Download analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar:
  - Install Enterprise Console 23.8.0. See Install the Enterprise Console.
  - Extract the events-service.zip file.
  - Navigate to events-service/bin/migration to use the migration utility jar.

- Install JDK 17 and SQLite 3.x on the machine where you have downloaded the migration utility. SQLite helps you monitor the migration status.
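As a rough pre-flight check for the prerequisites above, the following sketch parses the major version from the first line of java -version output and confirms that a sqlite3 binary is on the PATH. The function names are hypothetical, and the version-string formats handled are the common ones (for example, openjdk version "17.0.8"), which is an assumption about your JDK vendor.

```shell
#!/bin/sh
# Hypothetical helper: extract the major version from a `java -version` line.
# Handles the modern "17.0.8" form and the legacy "1.8.0_382" form.
java_major_version() {
  version=$(printf '%s\n' "$1" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  case "$version" in
    1.*) printf '%s\n' "$version" | cut -d. -f2 ;;
    *)   printf '%s\n' "$version" | cut -d. -f1 ;;
  esac
}

# Hypothetical helper: succeed only if JDK 17 and sqlite3 are both available.
check_prerequisites() {
  first_line=$(java -version 2>&1 | head -n 1)
  [ "$(java_major_version "$first_line")" = "17" ] || { echo "JDK 17 not found" >&2; return 1; }
  command -v sqlite3 >/dev/null 2>&1 || { echo "sqlite3 not found" >&2; return 1; }
}
```

Calling check_prerequisites on the machine that holds the migration utility returns a non-zero status and prints the missing prerequisite if either check fails.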

Prepare Events Service 4.5.x Data for Migration

Go to $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.yml and enable the following property in the Events Service 4.5.x cluster:

    ad.es.node.http.enabled=true

Repeat this step on each node of the Events Service 4.5.x cluster.

- Restart the Events Service 4.5.x nodes from Enterprise Console.

Disable the job compaction for INDEX_COMPACTION_PARENT_JOB on Events Service 23.x.

    curl -H 'Content-type: application/json' -XPOST \
      'http://<ELB_hostname_of_Events_Service_23.x>:<ElasticSearch_Port>/job_framework_job_details_v1/_update_by_query' \
      -d '{ "query": { "term": { "name": "INDEX_COMPACTION_PARENT_JOB" } }, "script": { "inline": "ctx._source.jobDataMap.enabled = false", "lang": "painless" } }'

You can find the HTTP port of Elasticsearch from the ad.es.node.http.port property in the $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties file for the corresponding Events Service instance.

- In Events Service 23.x, edit the configuration file $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.yml to add the property reindex.remote.whitelist with a comma-separated list. This list consists of:

  - Load balancer host name and port of Elasticsearch 2.x.
  - Load balancer host name and port of Elasticsearch 8.x.

Syntax to Update reindex.remote.whitelist

    - className: com.appdynamics.analytics.processor.elasticsearch.configuration.ElasticsearchConfigManagerModule
      properties:
        nodeSettings:
          cluster.name: ${ad.es.cluster.name}
          reindex.remote.whitelist: "<ELB_hostname_of_Events_Service_4.5.x>:<Port>,<ELB_hostname_of_Events_Service_23.x>:<Port>,<node1_hostname_of_Events_Service_4.5.x>:<Port>,<node2_hostname_of_Events_Service_4.5.x>:<Port>"

Example

    reindex.remote.whitelist: "es2.elb.amazonaws.com:9200,es8.elb.amazonaws.com:9200,es2node1.amazonaws.com:9200,es2node2.amazonaws.com:9200,10.20.30.40:9200,10.20.30.41:9200,10.20.30.42:9200"
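Because the whitelist value is a long comma-separated string, it can be easier to assemble it from a list of hosts than to type it by hand. The sketch below is one way to do that; build_whitelist is a hypothetical helper, and it assumes every host listens on the same Elasticsearch HTTP port.

```shell
#!/bin/sh
# Hypothetical helper: join hosts into "host:port,host:port,..." for
# reindex.remote.whitelist. The first argument is the shared HTTP port
# (an assumption: all hosts use the same port).
build_whitelist() {
  port=$1
  shift
  list=""
  for host in "$@"; do
    # Prefix a comma only when the list already has an entry.
    list="${list:+$list,}$host:$port"
  done
  printf '%s\n' "$list"
}
```

For example, build_whitelist 9200 es2.elb.amazonaws.com es8.elb.amazonaws.com prints the first two entries of the example value above.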

Create the application.yml file in the folder where you have downloaded the migration utility:

Folder Structure

    /home/username/
    - analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar
    - config
      - application.yml

Example: application.yml

    es:
      # Target Elasticsearch hostname and port for the data migration
      # (Get this from $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties ad.es.node.http.port from events-service 23.x)
      targetHostName: es8.elb.amazonaws.com
      targetPort: 9200
      # Source Elasticsearch hostname and port for the data migration
      # (Get this from $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties ad.es.node.http.port from events-service 4.x)
      sourceHostName: es2.elb.amazonaws.com
      sourcePort: 9200
      # Maximum number of retries per index during the migration
      maxRetriesPerIndex: 3
      # Delay in milliseconds between each retry attempt
      delayBetweenRetry: 2000
      # Set this to true if you want to use an external version for iteration 2 and subsequent,
      # or false if you want to use an internal version for migration.
      useExternalVersion: true
    clusters:
      source:
        api:
          keys:
            # Controller API key for the source Elasticsearch v2.x cluster
            # (Get this from $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties ad.accountmanager.key.controller from events-service 4.x)
            CONTROLLER: 12a12a12-12aa-12aa-a123-123a12a1a123
            # OPS API key for the source Elasticsearch v2.x cluster
            # (Get this from $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties ad.accountmanager.key.ops from events-service 4.x)
            OPS: "a1a12ab1-a12a-1a12-123a-a1234a12a123"
          # API hostname and port of the source events-service cluster
          # Get the port from the events-service-api-store.properties file ad.dw.http.port from events-service 4.x
          api: https://events-service.elb.amazonaws.com:9080
          # Provide the absolute path of the certificate (if needed) for the source events-service cluster
          cert: "/home/username/wildcart.crt"
          # Set this to true if you want to verify the hostname when creating the SSL context
          # or false to skip the hostname verification.
          check: false
        elasticsearch:
          # URL and internal URL of the source Elasticsearch v2.x cluster
          # (Get this from $APPDYNAMICS_HOME/appdynamics/platform/events-service/processor/conf/events-service-api-store.properties ad.es.node.http.port from events-service 4.x)
          url: http://es2.elb.amazonaws.com:9200
          internal: http://es2.elb.amazonaws.com:9200
          # Elasticsearch version of the source cluster (v2.x in this case)
          version: 2
          # Protocol to connect to the source Elasticsearch v2.x cluster (http)
          protocol: http
      destination:
        api:
          keys:
            # Controller API key for the destination Elasticsearch v8.x cluster
            # Get this from $APPDYNAMICS_HOME/appdynamics/platform/events-service/processor/conf/events-service-api-store.properties ad.accountmanager.key.controller from events-service 23.x
            CONTROLLER: 12a12a12-12aa-12aa-a123-123a12a1a123
            # OPS API key for the destination Elasticsearch v8.x cluster
            # Get this from $APPDYNAMICS_HOME/platform/events-service/processor/conf/events-service-api-store.properties ad.accountmanager.key.ops from events-service 23.x
            OPS: "a1a12ab1-a12a-1a12-123a-a1234a12a123"
          # API hostname and port of the destination events-service cluster (not Elasticsearch v8.x cluster)
          # Get the port from the events-service-api-store.properties file ad.dw.http.port from events-service 23.x
          api: "https://events-service.elb.amazonaws.com:9080"
          # Provide the absolute path of the certificate (if needed) for the destination events-service cluster
          cert: "/home/username/wildcart.crt"
          # Set this to true if you want to verify the hostname when creating the SSL context
          # or false to skip the hostname verification.
          check: true
        elasticsearch:
          # URL and internal URL of the destination Elasticsearch v8.x cluster
          url: http://es8.elb.amazonaws.com:9200
          internal: http://es8.elb.amazonaws.com:9200
          # Elasticsearch version of the destination cluster (v8.x in this case)
          version: 8
          # Protocol to connect to the destination Elasticsearch v8.x cluster (http or https).
          # If Elasticsearch is SSL/TLS enabled, then use the https scheme.
          protocol: http
          # Provide the absolute path of the .cer file.
          # (needed if https is enabled)
          trustStoreFile: "/home/username/client-ca.cer"
          # Elasticsearch username and password (if required) for the destination v8.x cluster
          # If the protocol is https, this is mandatory
          elasticsearchUsername: "elastic"
          elasticsearchPassword: "test123"
    migration:
      # Number of search hits per request during migration
      search_hits: 5000
      # Number of concurrent reindex requests during migration (based on CPU cores); the maximum recommended value is 8.
      reindex_concurrency: 4
      # Batch size for scrolling through search results during migration; reduce this if you hit the exception: Remote responded with chunk size
      reindex_scroll_batch_size: 5000
      # Number of reindex requests per second during migration
      reindex_requests_per_second: 8000
      # Threshold size in bytes for large rollovers during migration (60 GB in this case). This value determines when the index rollover should occur depending on the anticipated ingestion speed and migration duration.
      # For example, if the migration tool is utilizing 4 reindex_concurrency threads and the migration takes 15 hours to complete on a 1 TB setup, and if the average ingestion speed is 3 GB per hour, then the index is expected to grow to approximately 45 GB by the end of the migration.
      # Set the value of "large_rollover_threshold_size_bytes" to 60 GB in this scenario. If any index exceeds 60 GB during migration, the migration tool will trigger a rollover.
      large_rollover_threshold_size_bytes: 60000000000
      # Threshold size in bytes for small rollovers during migration (20 GB in this case). This value determines when the index rollover should occur depending on the anticipated ingestion speed and migration duration.
      # For example, if the migration tool is utilizing 4 reindex_concurrency threads and the migration takes 15 hours to complete on a 1 TB setup, and if the average ingestion speed is 1 GB per hour, then the index is expected to grow to approximately 15 GB by the end of the migration.
      # Set the value of "small_rollover_threshold_size_bytes" to 20 GB in this scenario. If any index exceeds 20 GB during migration, the migration tool will trigger a rollover.
      small_rollover_threshold_size_bytes: 20000000000
      # Required low disk size watermark during migration (85% in this case)
      required_low_disk_size_watermark: 85
    # SQLite3 configuration for status monitoring
    spring:
      jpa:
        properties:
          hibernate:
            # Hibernate dialect for SQLite database
            dialect: org.hibernate.community.dialect.SQLiteDialect
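The rollover threshold comments in the example configuration rest on simple arithmetic: expected index growth is the ingestion rate multiplied by the migration duration, and the threshold is then set somewhat above that figure. The sketch below reproduces the two worked examples. The function names are hypothetical, the inputs are assumed to be whole numbers, and the byte conversion assumes decimal gigabytes because the example writes 60 GB as 60000000000. How much headroom to add above the expected growth remains a judgment call.

```shell
#!/bin/sh
# Expected index growth in GB: ingestion rate (GB/hour) x migration duration (hours).
expected_growth_gb() {
  echo $(( $1 * $2 ))
}

# Convert decimal gigabytes to bytes (1 GB = 1,000,000,000 bytes), matching
# the 60000000000 value used for 60 GB in the example configuration.
gb_to_bytes() {
  echo $(( $1 * 1000000000 ))
}
```

With the figures from the comments, expected_growth_gb 3 15 gives 45 and gb_to_bytes 60 gives the value used for large_rollover_threshold_size_bytes.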

Choose one of the following strategies depending on your requirements:

Migrate Events Service Data Using the Cutover Strategy

The migration utility first migrates the existing Events Service data from the Events Service 4.5.x cluster to the 23.x cluster. If any data or metadata is collected in the Events Service 4.5.x cluster during migration, the migration utility does the following:

- Uses the timestamp to identify the delta data or metadata.

- Copies the delta to the Events Service 23.x cluster.

- Ensures that new data and metadata flow to the Events Service 23.x cluster.

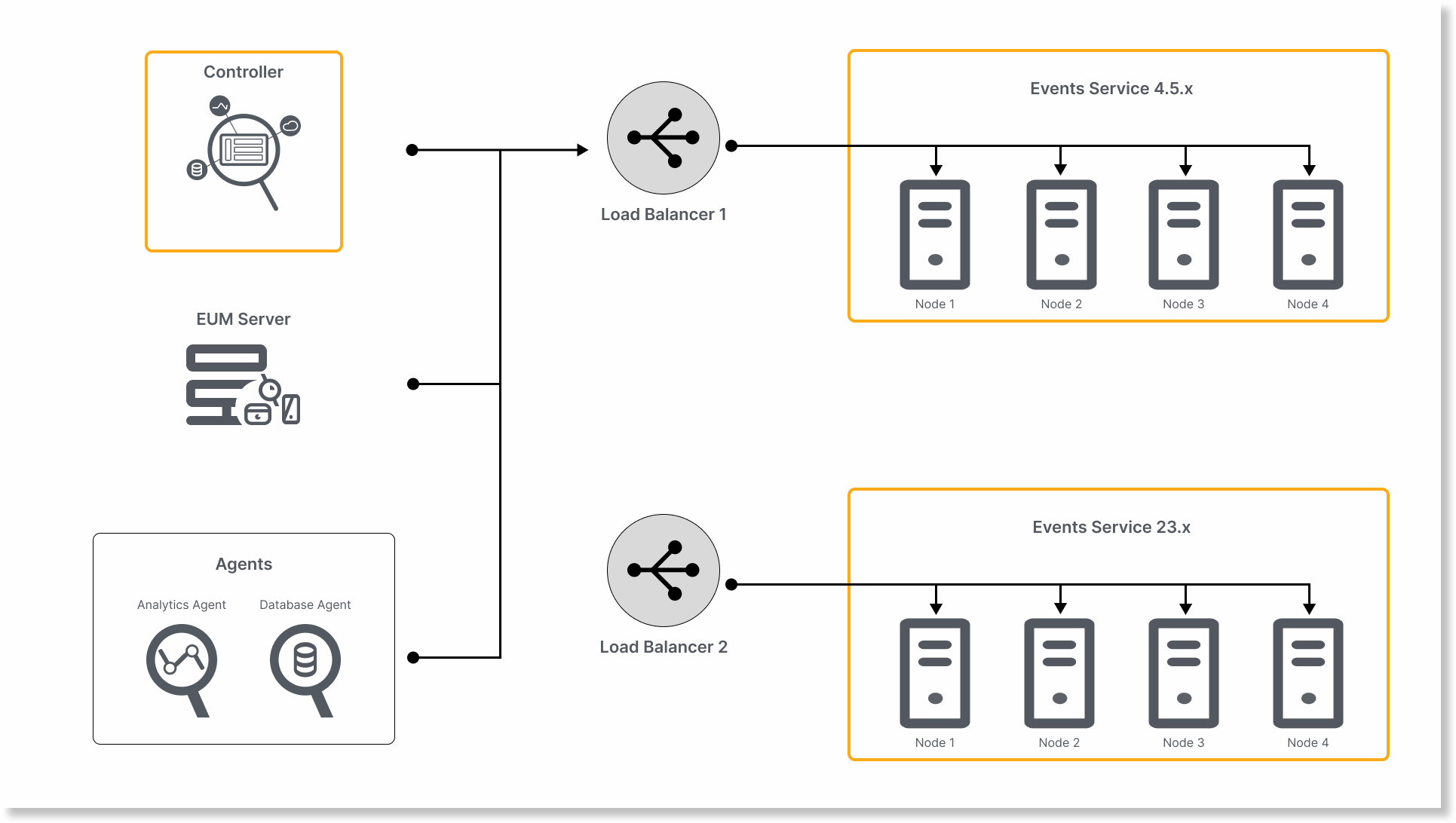

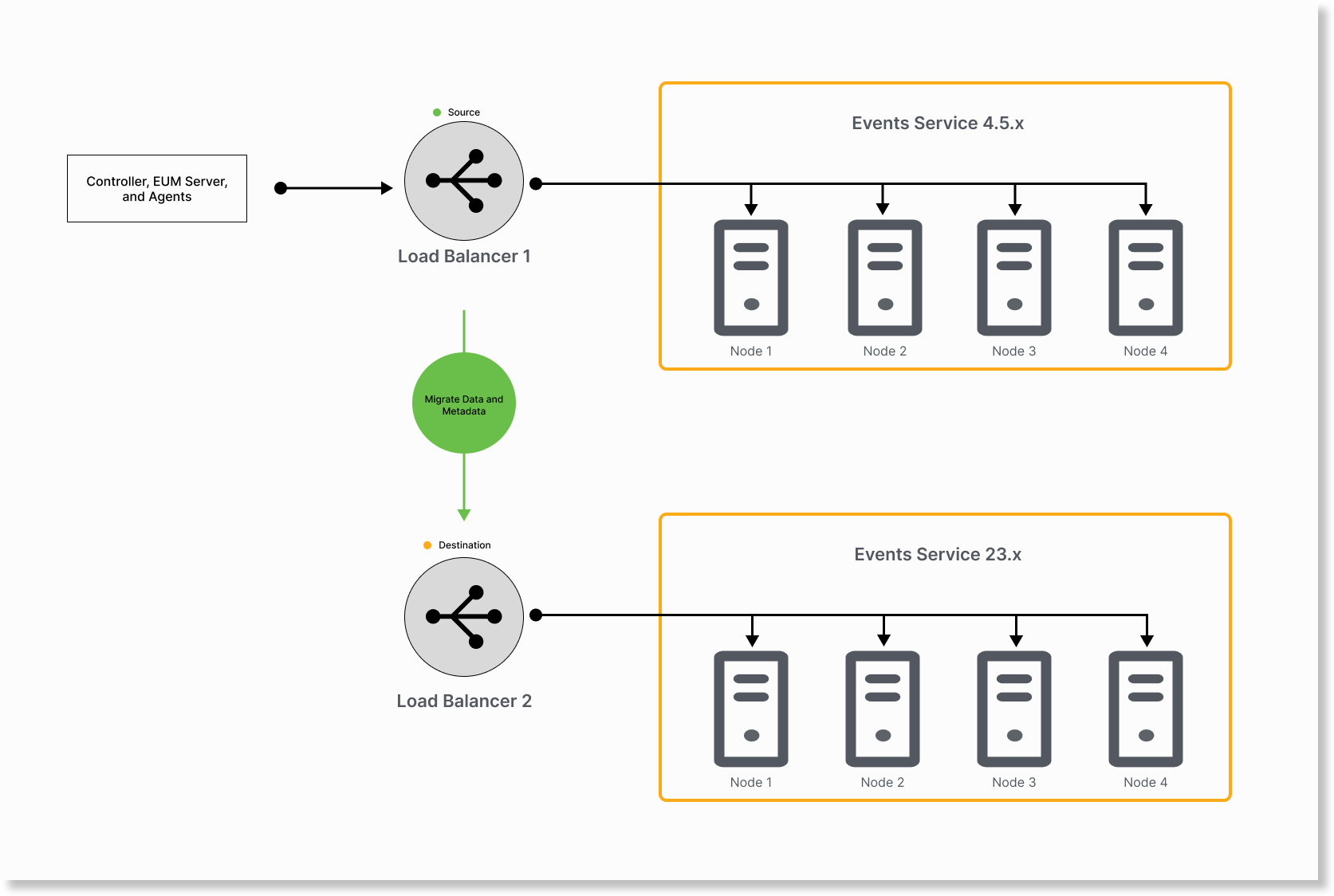

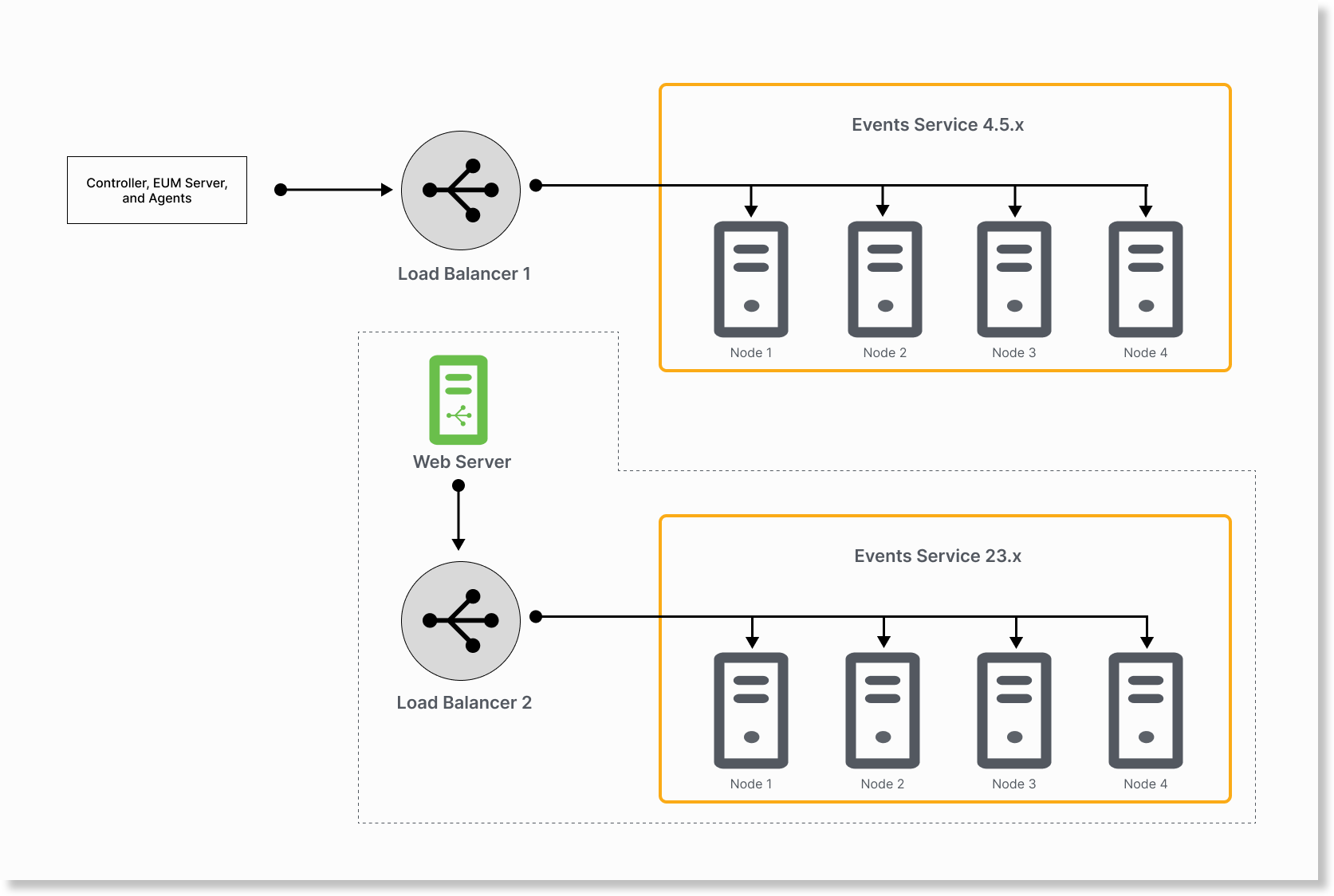

The migration requires two load balancers that are configured as follows:

- Load balancer 1 (LB1) points to Events Service 4.5.x nodes.

- Load balancer 2 (LB2) points to Events Service 23.x nodes.

- Controller, EUM, and Agents point to LB1.

The cutover strategy does not provide a rollback option. If you require a rollback option after migration, see Migrate Events Service Data with a Rollback Option.

You can use one of the following migration approaches based on your requirement:

- Approach 1 - Use Two Load Balancers with Simple Cutover Steps (Recommended): This approach requires you to specify the IP address of an Events Service 4.5.x node to migrate data after cutover.

- Approach 2 - Use Two Load Balancers with Detailed Cutover Steps: This approach requires you to specify the IP addresses of LB1 and LB2 to migrate data after cutover. You must therefore add the Events Service 4.5.x cluster to the load balancer after cutover.

Approach 1 - Use Two Load Balancers with Simple Cutover Steps (Recommended)

This approach requires two load balancers. After cutover, you must specify the IP address of an Events Service 4.5.x node to migrate the remaining data.

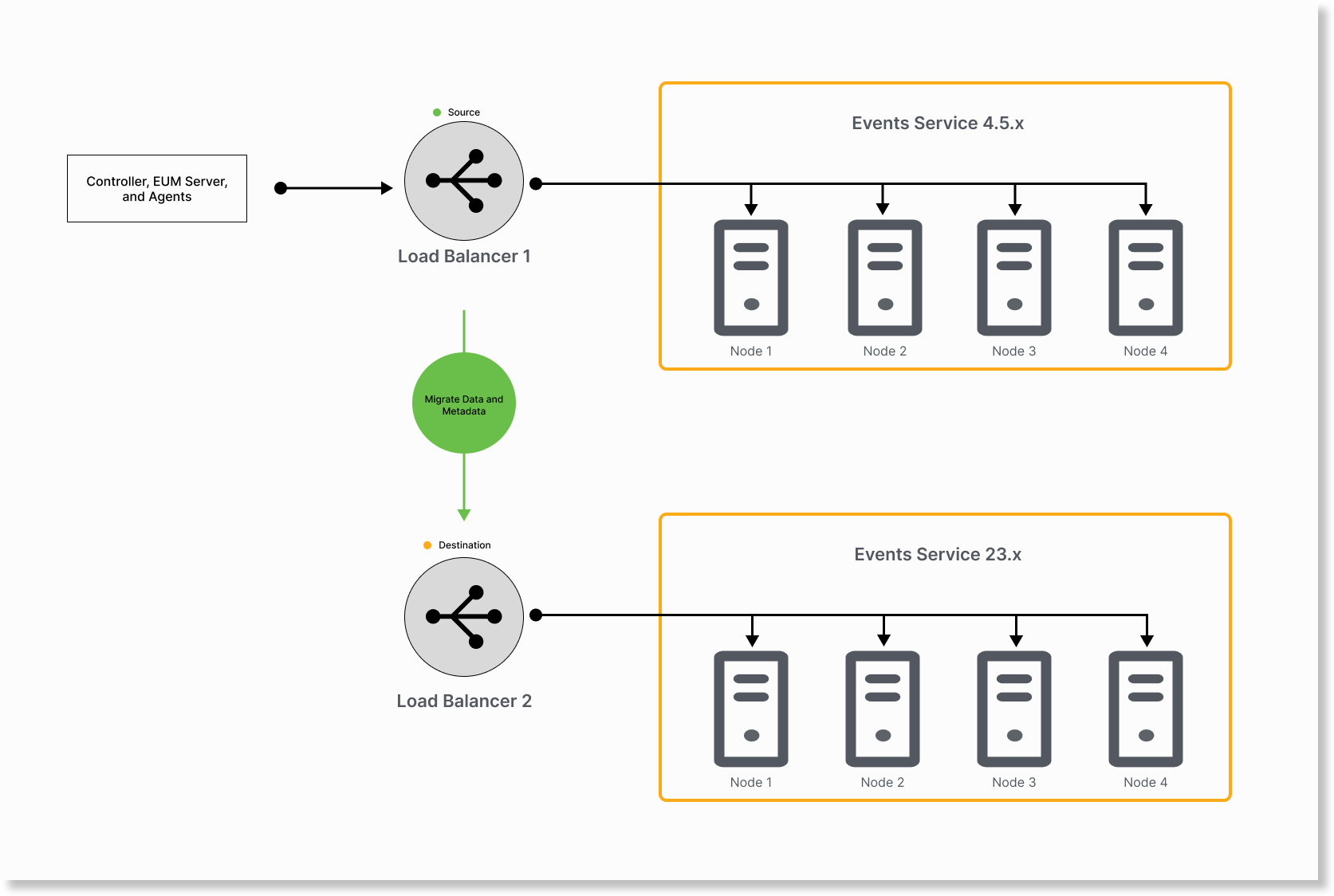

- Run the migration utility by keeping LB1 as the source and LB2 as the destination.

Roll over the Events Service 4.5.x indices.

    nohup java -jar analytics-on-prem-es2-es8-migration-23.7.0-243-exec.jar rollover > rollover_output.log 2>&1 &

Migrate data and metadata from Events Service 4.5.x to 23.x.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > data_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > metadata_output.log 2>&1 &

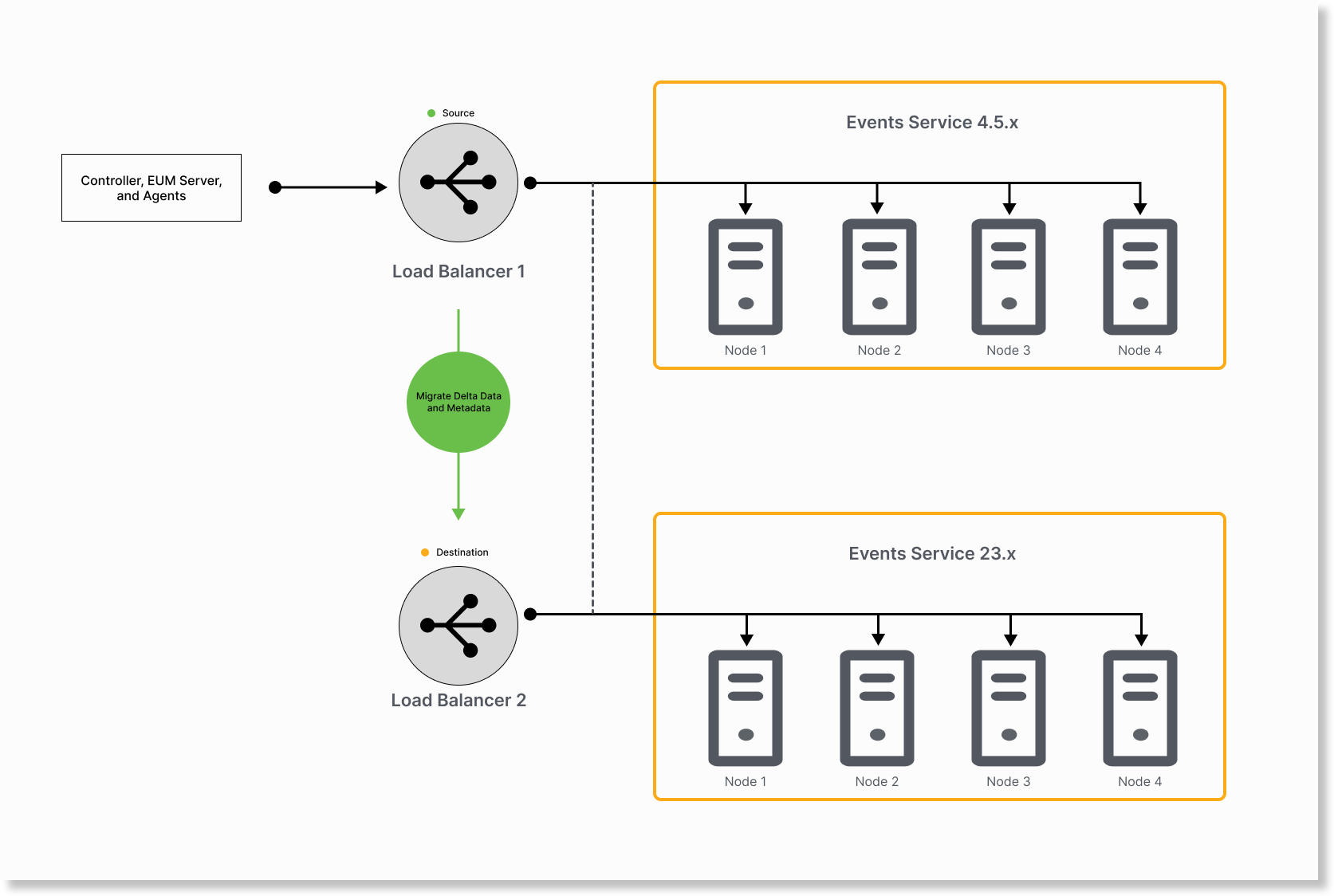

Migrate delta from Events Service 4.5.x to 23.x nodes:

nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > delta_data_output.log 2>&1 & nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > delta_metadata_output.log 2>&1 &CODE

- We recommend that you repeat this step until delta in Events Service 4.5.x is minimum. Use logs to determine how much time each iteration takes to migrate the delta. If the total time taken for the iteration is significantly lesser than the previous iterations, it indicates that the delta in Events Service 4.5.x cluster has reduced. You can stop the iterations if the last two iterations take nearly the same time to migrate.

For example, if the iteration takes 30 seconds where the previous iteration also took nearly 30 seconds, you can consider that the delta is at minimum. - Reduce the time gap between delta migration and post cutover migration. It ensures the data to migrate after cutover is minimum.

Example Log Excerpt

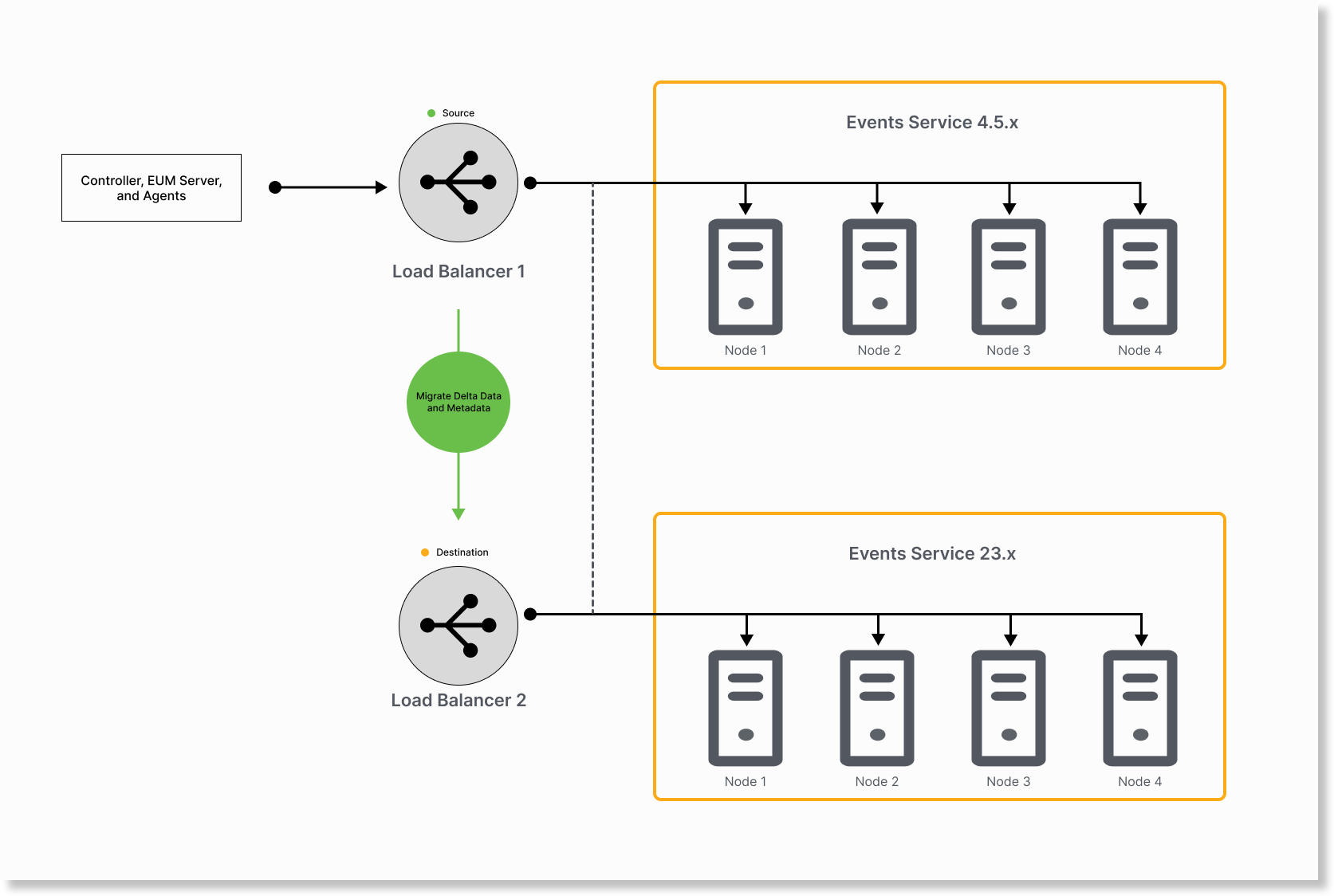

2023-08-09T21:52:43.116+05:30 INFO 87453 --- [ main] c.a.analytics.onprem.MigrationTool : Total time taken: 30 secondsCODEWhen the delta in your Events Service 4.5.x cluster is minimal, you can add Events Service 23.x nodes to LB1. This starts the cutover phase.

You may lose some data if you have active sessions or transactions.

Add Events Service 23.x nodes to LB1. During this time, the data can flow to Events Service 23.x or 4.5.x nodes.

Remove Events Service 4.5.x nodes from LB1 to end the cutover. Data then flows only to Events Service 23.x nodes.

Run the following commands by keeping the host name or IP address of any Events Service 4.5.x node as the source and LB1 as the target in the application.yml file.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_metadata > post_cutover_metadata_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_data > post_cutover_data_output.log 2>&1 &

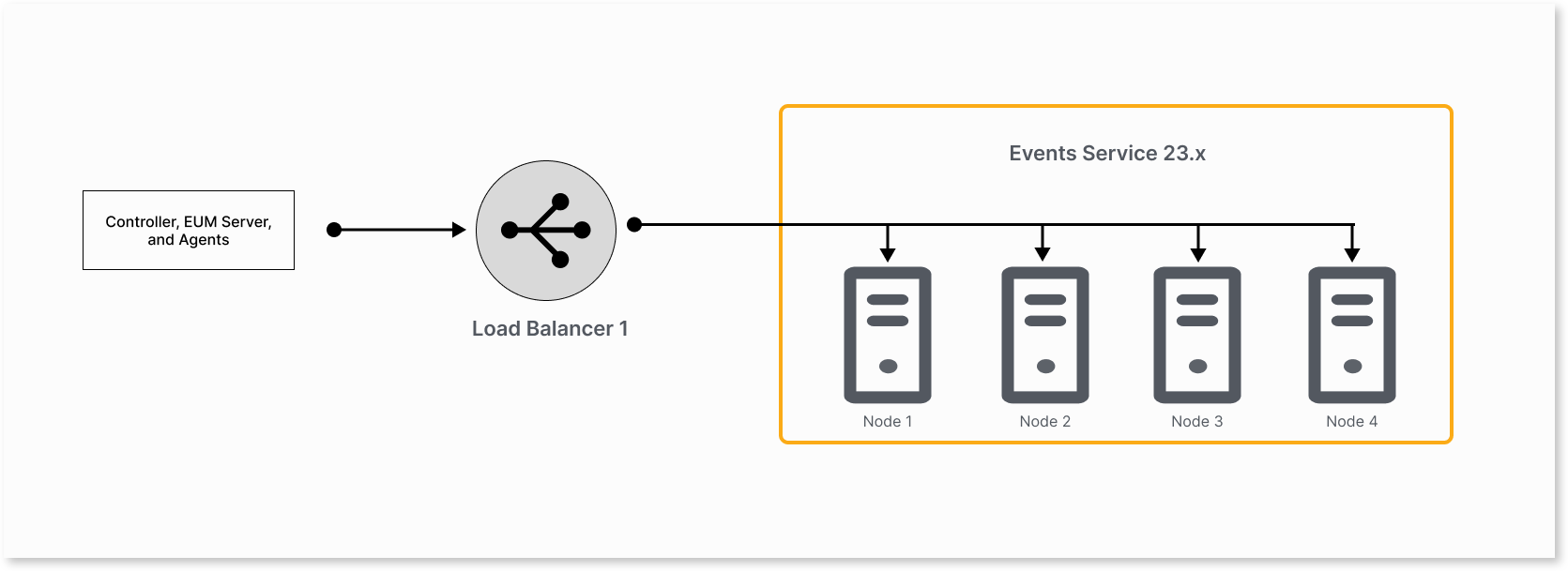

The Events Service 23.x cluster is now synchronized.

- Verify the migration status. You may decommission the Events Service 4.5.x cluster if the migration is successful.

We recommend this approach because you are not required to swap load balancers or update the application.yml file after cutover.
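The stopping rule for delta iterations described in the steps above (stop when the last two iterations take nearly the same time) can be sketched as a small log check. Both helpers are hypothetical. They assume the log reports the duration in whole seconds exactly as in the example excerpt, and the default tolerance of 5 seconds is an arbitrary illustration, not a documented value.

```shell
#!/bin/sh
# Hypothetical helper: print the seconds from the last "Total time taken"
# line of a migration log (format taken from the example log excerpt).
total_seconds() {
  sed -n 's/.*Total time taken: \([0-9][0-9]*\) seconds.*/\1/p' "$1" | tail -n 1
}

# Hypothetical helper: succeed when two iteration times differ by at most
# a tolerance in seconds (third argument, default 5; an arbitrary choice).
delta_is_stable() {
  prev=$1
  curr=$2
  tol=${3:-5}
  diff=$(( curr - prev ))
  [ "$diff" -lt 0 ] && diff=$(( -diff ))
  [ "$diff" -le "$tol" ]
}
```

A typical use is to keep a copy of each iteration's log under a different name, read both durations with total_seconds, and proceed to cutover only when delta_is_stable succeeds for the last two values.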

Approach 2 - Use Two Load Balancers with Detailed Cutover Steps

This approach requires two load balancers. After cutover, you must specify the IP addresses of LB1 and LB2 to migrate the remaining data.

- Run the migration utility by keeping LB1 as the source and LB2 as the destination.

Roll over the Events Service 4.5.x indices.

    nohup java -jar analytics-on-prem-es2-es8-migration-23.7.0-243-exec.jar rollover > rollover_output.log 2>&1 &

Migrate data and metadata from Events Service 4.5.x to 23.x.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > data_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > metadata_output.log 2>&1 &

Migrate delta from Events Service 4.5.x to 23.x nodes:

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > delta_data_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > delta_metadata_output.log 2>&1 &

- We recommend that you repeat this step until the delta in Events Service 4.5.x is minimal. Use the logs to determine how long each iteration takes to migrate the delta. If an iteration takes significantly less time than the previous iterations, the delta in the Events Service 4.5.x cluster has reduced. You can stop the iterations when the last two iterations take nearly the same time.

  For example, if an iteration takes 30 seconds and the previous iteration also took nearly 30 seconds, you can consider the delta to be at its minimum.

- Reduce the time gap between delta migration and post cutover migration. This ensures that the data to migrate after cutover is minimal.

Example Log Excerpt

    2023-08-09T21:52:43.116+05:30 INFO 87453 --- [ main] c.a.analytics.onprem.MigrationTool : Total time taken: 30 seconds

When the delta in your Events Service 4.5.x cluster is minimal, you can start the cutover phase.

You may lose some data if you have active sessions or transactions.

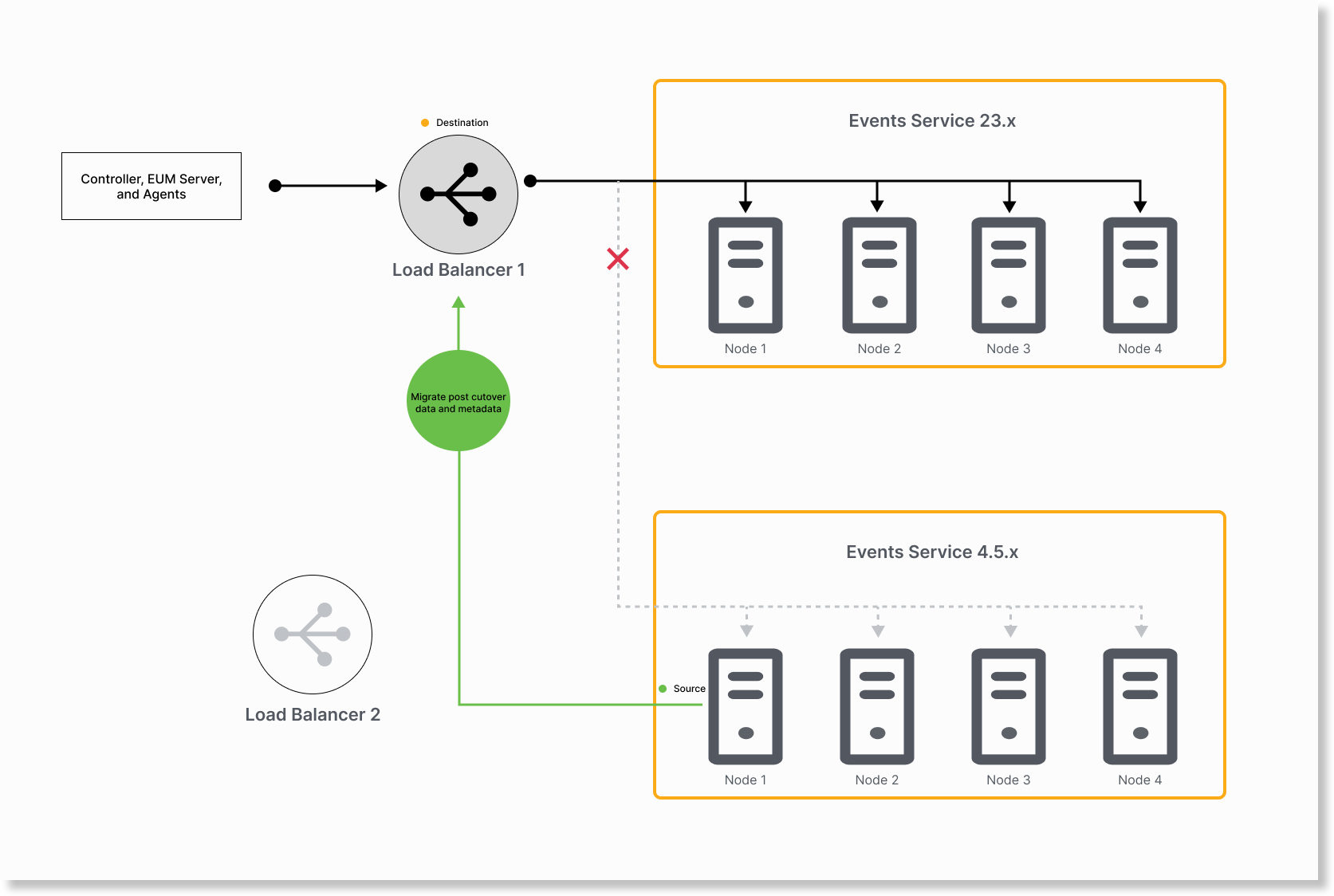

To start the cutover phase, add Events Service 23.x nodes to LB1. During this time, the data can flow to Events Service 23.x or 4.5.x nodes.

Remove Events Service 4.5.x nodes from LB1 to end the cutover. Data then flows only to Events Service 23.x nodes.

Remove Events Service 23.x nodes from LB2.

Add Events Service 4.5.x nodes to LB2.

Run the following commands by keeping LB2 as the source and LB1 as the target in the application.yml file.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_metadata > post_cutover_metadata_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_data > post_cutover_data_output.log 2>&1 &

The Events Service 23.x cluster is now synchronized. Verify the migration status. If the migration is successful, you may decommission the Events Service 4.5.x cluster.
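The post cutover step above starts two background jobs, so it helps to know when both have finished before you verify the migration status. The sketch below launches each phase the same way as the documented commands and then waits for every job. run_phases, JAR, and JAVA_BIN are hypothetical names introduced here. The sketch also assumes that the migration utility exits with a non-zero status on failure, which the source does not confirm, so treat the return value as a hint and still check the logs.

```shell
#!/bin/sh
# Hypothetical defaults: the jar name follows the document's placeholder;
# JAVA_BIN exists only so the launcher can be swapped out for a dry run.
JAR=${JAR:-analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar}

# Start every named phase in the background, logging to <phase>_output.log,
# then wait for all of them. Returns 1 if any job exits non-zero
# (assumption: the utility signals failure through its exit status).
run_phases() {
  pids=""
  for phase in "$@"; do
    nohup "${JAVA_BIN:-java}" -jar "$JAR" "$phase" > "${phase}_output.log" 2>&1 &
    pids="$pids $!"
  done
  failed=0
  for pid in $pids; do
    wait "$pid" || failed=1
  done
  return "$failed"
}
```

For the step above, the call would be run_phases post_cutover_metadata post_cutover_data, which blocks until both jobs exit and leaves the same log files as the documented commands.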

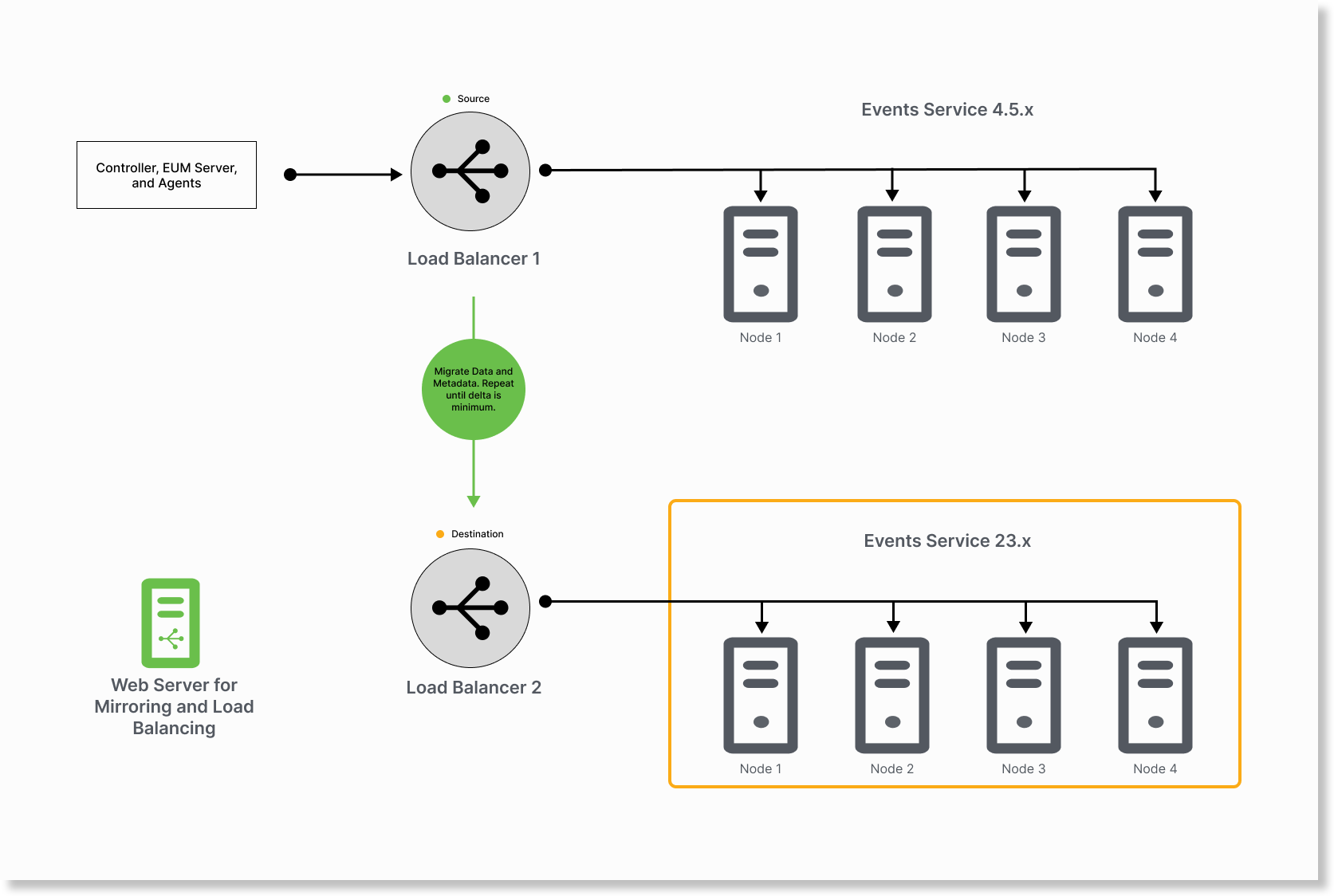

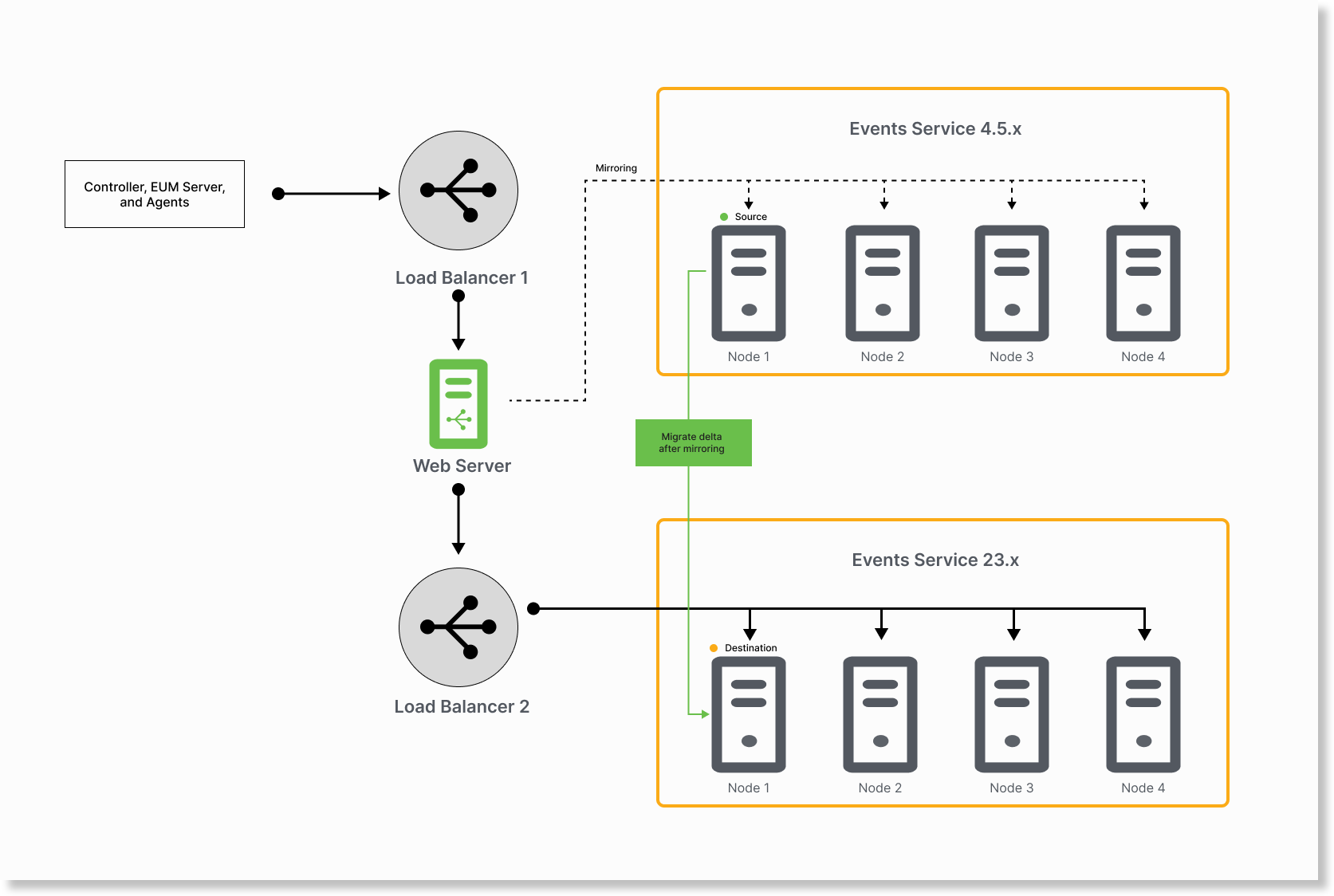

Migrate Events Service Data with a Rollback Option

The cutover strategy does not provide a rollback option. To roll back the migration, you must use the mirroring strategy. This strategy replicates the latest data to the Events Service 4.5.x cluster while ingesting the latest data to Events Service 23.x cluster. Later, you can decide whether to use Events Service 23.x cluster or roll back to the Events Service 4.5.x cluster.

Ensure that you have a web server that can mirror and load balance your traffic between the Events Service clusters. We recommend that you use an NGINX web server, which provides both mirroring and load balancing capabilities.

- Run the migration utility by keeping LB1 as the source and LB2 as the destination.

Roll over the Events Service 4.5.x indices.

    nohup java -jar analytics-on-prem-es2-es8-migration-23.7.0-243-exec.jar rollover > rollover_output.log 2>&1 &

Migrate data and metadata from Events Service 4.5.x to 23.x.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > data_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > metadata_output.log 2>&1 &

Migrate delta from Events Service 4.5.x to 23.x nodes:

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar data > delta_data_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar metadata > delta_metadata_output.log 2>&1 &

We recommend that you repeat this step until the delta in Events Service 4.5.x is minimal. Use the logs to determine how long each iteration takes to migrate the delta. If an iteration takes significantly less time than the previous iterations, the delta in the Events Service 4.5.x cluster has reduced. You can stop the iterations when the last two iterations take nearly the same time.

For example, if an iteration takes 30 seconds and the previous iteration also took nearly 30 seconds, you can consider the delta to be at its minimum.

    2023-08-09T21:52:43.116+05:30 INFO 87453 --- [ main] c.a.analytics.onprem.MigrationTool : Total time taken: 30 seconds

When the delta in your Events Service 4.5.x cluster is minimal, you can configure NGINX for mirroring and load balancing between the Events Service clusters.

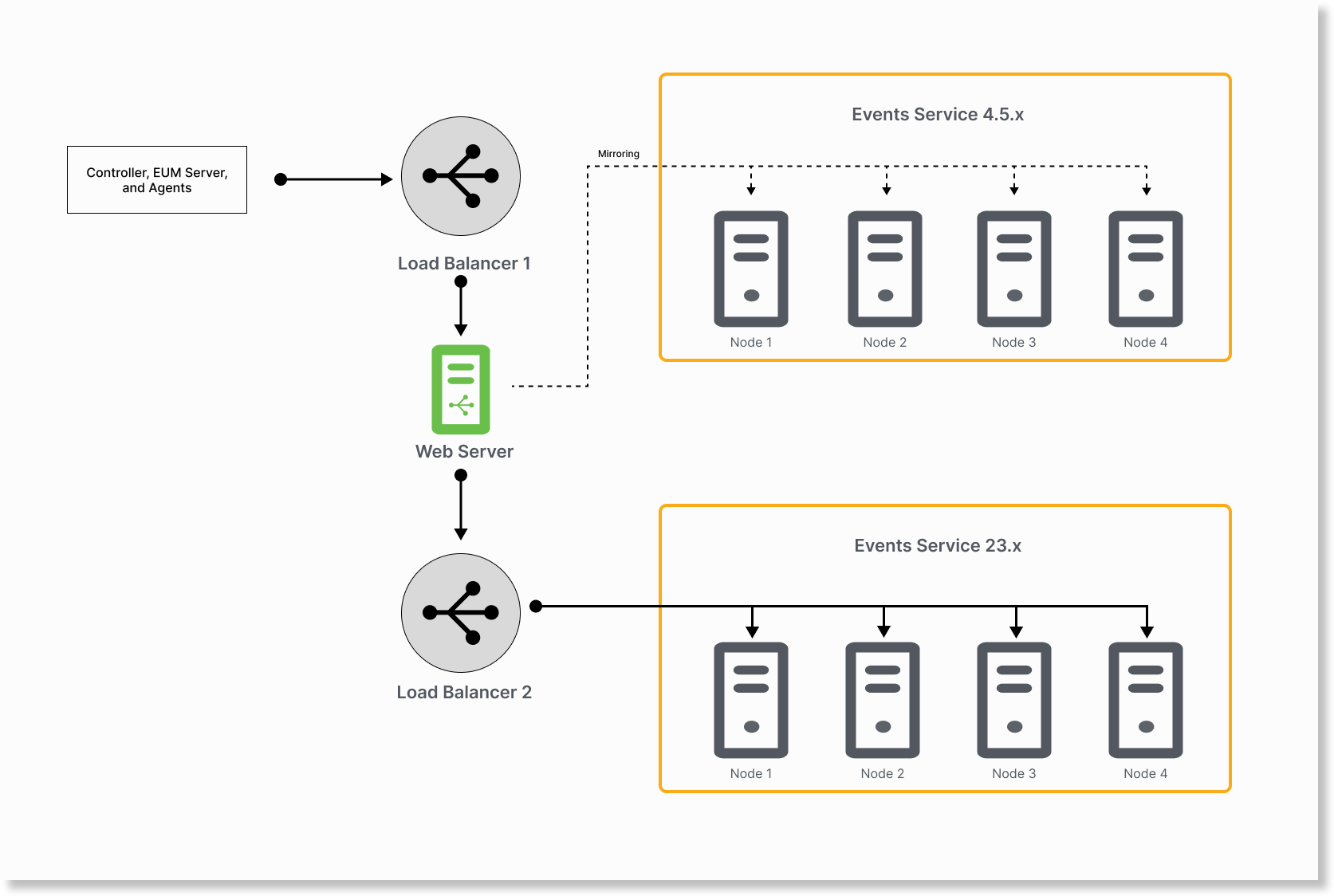

Set up an NGINX web server for mirroring and load balancing.

    sudo yum install nginx
    sudo vi /etc/nginx/conf.d/mirror.conf

NGINX web server points to LB2.

NGINX web server mirrors the data to Events Service 4.5.x.

LB1 points to NGINX web server.

    upstream backend {
        server es8-lb:9080;
    }
    upstream test_backend {
        server es2-node1:9080;
        server es2-node2:9080;
        server es2-node3:9080;
    }
    upstream backend_9081 {
        server es8-lb:9081;
    }
    upstream test_backend_9081 {
        server es2-node1:9081;
        server es2-node2:9081;
        server es2-node3:9081;
    }
    upstream backend_9200 {
        server es8-lb:9200;
    }
    upstream test_backend_9200 {
        server es2-node1:9200;
        server es2-node2:9200;
        server es2-node3:9200;
    }
    upstream backend_9300 {
        server es8-lb:9300;
    }
    upstream test_backend_9300 {
        server es2-node1:9300;
        server es2-node2:9300;
        server es2-node3:9300;
    }
    server {
        server_name nginx-instance;
        listen 9200;
        location / {
            mirror /mirror;
            proxy_pass http://backend_9200;
        }
        location = /mirror {
            internal;
            proxy_pass http://test_backend_9200$request_uri;
        }
    }
    server {
        server_name nginx-instance;
        listen 9300;
        location / {
            mirror /mirror;
            proxy_pass http://backend_9300;
        }
        location = /mirror {
            internal;
            proxy_pass http://test_backend_9300$request_uri;
        }
    }
    server {
        server_name nginx-instance;
        listen 9080;
        location / {
            mirror /mirror;
            proxy_pass http://backend;
        }
        location = /mirror {
            internal;
            proxy_pass http://test_backend$request_uri;
        }
    }
    server {
        server_name nginx-instance;
        listen 9081;
        location / {
            mirror /mirror;
            proxy_pass http://backend_9081;
        }
        location = /mirror {
            internal;
            proxy_pass http://test_backend_9081$request_uri;
        }
    }

Run the following commands by keeping any node in the Events Service 4.5.x cluster as the source and any node in the Events Service 23.x cluster as the target in the application.yml file.

Do not use load balancers as the source or target.

    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_metadata > post_cutover_metadata_output.log 2>&1 &
    nohup java -jar analytics-on-prem-es2-es8-migration-LATESTVERSION-exec.jar post_cutover_data > post_cutover_data_output.log 2>&1 &

If you need to roll back to Events Service 4.5.x, perform the following:

- Add the Events Service 4.5.x cluster to LB1.

- Remove NGINX web server from LB1.

To continue using the Events Service 23.x cluster after migration, perform the following:

- Add the Events Service 23.x cluster to LB1.

- Remove NGINX web server from LB1.

The Events Service 23.x cluster is now synchronized. Verify the migration status. If the migration is successful, you may decommission the Events Service 4.5.x cluster.
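The NGINX configuration in this section repeats one mirroring server block for each of the ports 9080, 9081, 9200, and 9300. If you maintain that file by script, a generator along the following lines avoids copy-and-paste errors. mirror_server_block is a hypothetical helper, and the upstream names passed to it are assumed to be defined elsewhere in the same file, as they are in the configuration above.

```shell
#!/bin/sh
# Hypothetical helper: print the mirroring server block for one port.
# $1 = listen port, $2 = primary upstream name, $3 = mirror upstream name.
mirror_server_block() {
  port=$1
  primary=$2
  mirror=$3
  # \$request_uri is escaped so that NGINX, not the shell, expands it.
  cat <<EOF
server {
    server_name nginx-instance;
    listen $port;
    location / {
        mirror /mirror;
        proxy_pass http://$primary;
    }
    location = /mirror {
        internal;
        proxy_pass http://$mirror\$request_uri;
    }
}
EOF
}
```

For example, mirror_server_block 9200 backend_9200 test_backend_9200 prints the block for port 9200. After writing the generated blocks to the configuration file, running sudo nginx -t checks the syntax before you reload NGINX.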