Configure Log Analytics Using Source Rules

The Centralized Log Management UI enables you to configure your log data sources using source rules.

Once you define source rules, you specify which Analytics Agents should use the rules by associating specific source rules with Agent Scopes. Agent Scopes are groups of Analytics Agents that you define. Using Agent Scopes simplifies the deployment of the log source configuration to multiple agents.

Log Analytics Source Rules

Analytics Agents collect logging information based on the log source rules you define in the Controller.

The primary function of a source rule is to specify the location and type of a log file, the pattern for capturing records from the log file, and the structure of the data of captured records. They can also specify field masking or sensitive data removal and manage time zones of captured records.

When the log source rules are enabled and associated with Agent Scopes, Analytics Agents automatically start collecting the configured logs as follows:

- Analytics Agents register with the Controller on startup

- Analytics Agents download log source rules to configure log collection (after registration)

- Log source rules are stored in the Controller data store and are configurable through the Centralized Log Management UI

- Analytics Agents start acting on log source rule changes within five minutes (this could be longer if there are any network communication issues)

Permissions

To configure log analytics source rules, you need the following permissions:

- Manage Centralized Log Config permission

- View access to the specific source types that you want to configure

See Analytics and Data Security.

Analytics Agent Properties

If you are using Centralized Log Management (CLM), you need to configure additional properties in the analytics-agent.properties file. For details, see the information on configuring properties for consolidated log management in Install Agent-Side Components.

Managing Source Rules

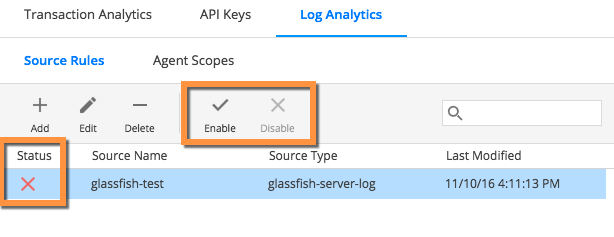

When you create a source rule and save it, the rule is initially disabled. This is shown in the Status column on the source rule list page.

You can enable or disable a source rule by selecting it and choosing the corresponding option from the Source Rules menu.

You can also see which source rules use a specific agent scope. The Analytics Agent does not collect the log data until the source rule is enabled and assigned to an Agent Scope with active Analytics Agents.
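The collection condition described above can be sketched as a small model. This is an illustration only: the `SourceRule` and `AgentScope` classes and the `collecting_agents` function are hypothetical names, not part of the Controller or Analytics Agent.

```python
from dataclasses import dataclass, field


@dataclass
class AgentScope:
    """Hypothetical model of an Agent Scope: a named group of agents."""
    name: str
    active_agents: set = field(default_factory=set)


@dataclass
class SourceRule:
    """Hypothetical model of a source rule. New rules start disabled."""
    name: str
    enabled: bool = False
    scope_names: set = field(default_factory=set)


def collecting_agents(rule, scopes):
    """Return the agents that would collect logs for this rule.

    Collection requires both conditions: the rule is enabled, and it is
    assigned to at least one scope that contains active agents.
    """
    if not rule.enabled:
        return set()
    agents = set()
    for scope_name in rule.scope_names:
        scope = scopes.get(scope_name)
        if scope is not None:
            agents |= scope.active_agents
    return agents


scopes = {"web-tier": AgentScope("web-tier", {"agent-1", "agent-2"})}
rule = SourceRule("apache-access", scope_names={"web-tier"})
print(collecting_agents(rule, scopes))  # disabled rule: no agents collect
rule.enabled = True
print(sorted(collecting_agents(rule, scopes)))
```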

Starting Point for Creating a Source Rule

You can create a source rule from any one of these starting points:

- AppDynamics template. Several templates for common log file formats are available.

- Existing source rule. You can use existing source rules as the starting point for new rules.

- New source rule. Start from scratch when your log file does not match one of the available templates.

Preview Extracted Log Data Using Sample Log Files

To improve validation of data collection and parsing of log messages, you can use a local log file with the Log Analytics Configuration UI to preview the field extraction that you want. Three types of field extraction are available.

- Grok patterns and key-value pair extraction

- Auto extraction using regular expressions

- Manual extraction using regular expressions

Best Practices for Source Rule Design

A few recommendations apply when designing source rules.

Use the simplest possible regular expressions and grok matching patterns. Do not make excessive use of wildcards or quantifiers, as this can slow the responsiveness of the Controller UI. You can view examples of greedy quantifiers at https://docs.oracle.com/javase/7/docs/api/java/util/regex/Pattern.html.

If a regex pattern (including grok) takes more than five seconds to match against a log line, extraction and further processing of those fields stop. If this occurs, some fields may be missing for that log line when viewed on the Controller. Other log lines are not affected; however, a timeout is often the result of an inefficient or faulty matching pattern in the first place, so processing is likely to take a long time for all log lines. This behavior also applies to the dynamic Preview screens in the Centralized Log Analytics Configuration page.
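As a rough illustration of the wildcard advice, the following sketch compares a wildcard-heavy pattern with a more specific one on the same line. It uses Python's `re` module rather than the Java regex engine referenced above, and the sample log line and field layout are invented for the example.

```python
import re

# Invented sample line: date, time, level, service, free-text message.
LINE = "2024-01-15 10:22:31 ERROR payment-service Timeout after 3000 ms"

# Wildcard-heavy: every ".*" can match almost anything, so the engine has to
# backtrack through many candidate split points, and the groups end up
# holding the wrong parts of the line.
GREEDY = re.compile(r"^(.*) (.*) (.*) (.*) (.*)$")

# Specific: each group accepts only the characters its field can contain,
# so the engine finds the right split points with little backtracking.
SPECIFIC = re.compile(
    r"^(\d{4}-\d{2}-\d{2}) (\d{2}:\d{2}:\d{2}) ([A-Z]+) (\S+) (.+)$"
)

greedy_fields = GREEDY.match(LINE).groups()
specific_fields = SPECIFIC.match(LINE).groups()

print(greedy_fields[0])    # the first group swallows four fields
print(specific_fields[0])  # the first group holds only the date
```

Both patterns match the line, but only the specific one assigns the intended value to each group. On longer lines the wildcard-heavy form also does more backtracking work per line.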

The Controller limits the size of the records it retrieves to 32 KB by source rule in log analytics. This limit guards against excessive system resource burden, including potential resource burden that might be caused by excessive data collection due to faulty source rule patterns.

Create a Source Rule

- From the Controller top navigation bar, click Analytics.

- From the left navigation panel, click Configuration > Log Analytics.

You see two tabs, one for Source Rules and one for Agent Scopes.

- From the Source Rules tab, click + Add.

You see the Add Source Rule panel.

- In the Add Source Rule panel, select your starting point for the source rule. Note that you can also use a job file as the starting point for your source rule. See Migrate Log Analytics Job Files to Source Rules.

Use Collection Type to indicate if the source log file resides on the local filesystem or will be collected from a network connection.

Collecting from a network connection is only used for a TCP source rule, which extracts log analytics fields from syslog messages over TCP. See Collect Log Analytics Data from Syslog Messages.

Use the Browse button to locate and specify a sample log file to preview the results of your configuration. You can also specify a sample file later in the configuration process during the field extraction step.

- Click Next to see the Add Source Configuration page. Some fields may be prepopulated with data if you selected either an AppDynamics template or an existing source rule as your starting point. The four tabs are:

- General

- Field Extraction

- Field Management

- Agent Mapping

- On the General tab, name your rule, specify its location, timestamp handling, and other general characteristics. See General Configuration.

- On the Field Extraction tab, configure the fields that you want to capture from your log file. For more details on the fields on this tab, see Field Extraction. For a detailed procedure on using Auto Field Extraction, see Field Extraction for Source Rules.

Use the simplest possible regular expressions and grok matching patterns. Do not make excessive use of wildcards or quantifiers, as this can slow the responsiveness of the Controller UI. Examples of expensive and greedy quantifiers can be found at https://docs.oracle.com/javase/7/docs/api/java/util/regex/Pattern.html.

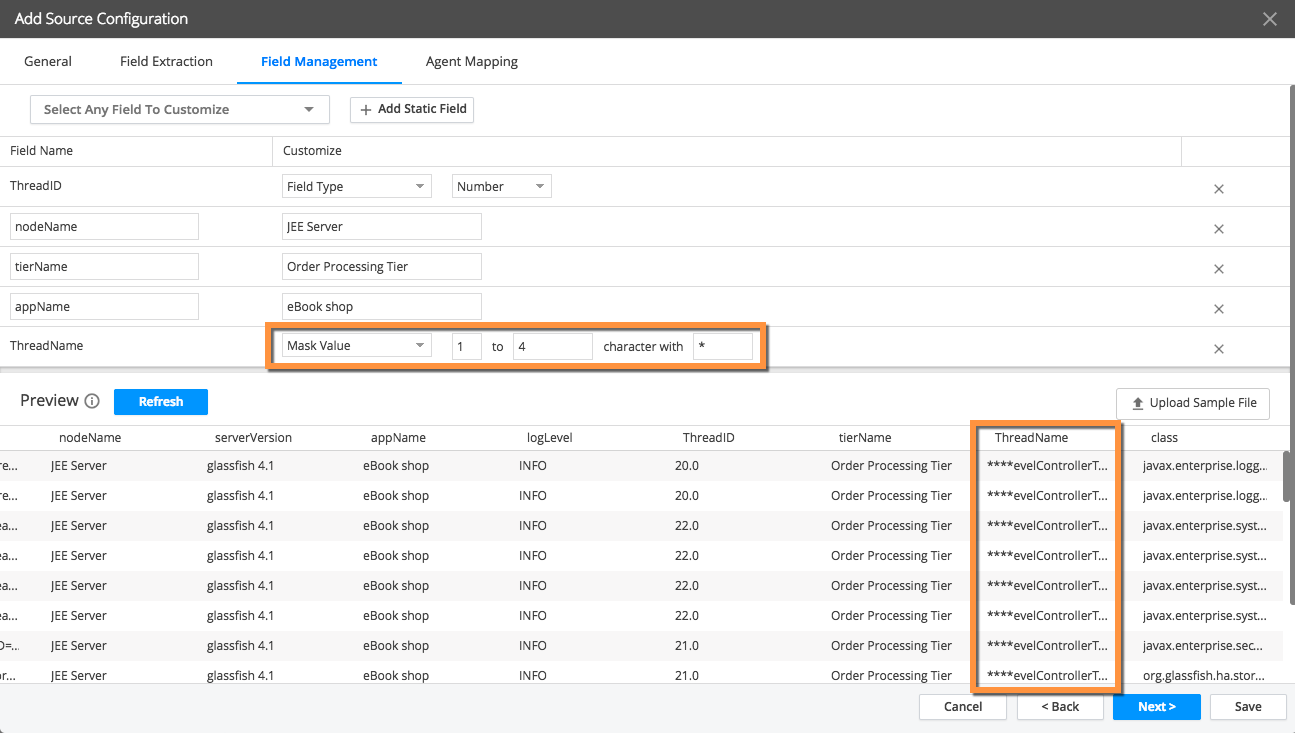

- On the Field Management tab, you can customize the handling of fields in a number of ways, including masking sensitive data, renaming fields, and changing the data type. See Field Management.

Mask Value: Use this option to mask values of sensitive data such as credit card numbers or social security numbers. Select Mask Value and enter the starting index and ending index (1 and 4 respectively for the example shown) and a character to use for masking in the display, such as an asterisk (*).

Replace Value: Use this option to replace the entire data field with a static string. Select Replace Value and enter the string to use.

- On the Agent Mapping tab, assign the source rule to specific Agent Scopes.

- Click Save when you are finished. The source rule is saved in a disabled state.

Centralized Log Management UI Details

General Configuration Tab

| Field | Description | Required |
|---|---|---|
| Source Name | Name of this source rule. This name must be unique. Appears in the list of defined source rules. | Yes |
| Source Type | Specifies the event type of the log source file. This field is prepopulated when you start from an AppDynamics template or an existing source rule. If you are creating a new source rule from scratch, you can specify any value. This value identifies this specific type of log event and can be used in searching and filtering the collected log data. The value shows up in the field list for the log data. | Yes |
| Source File | The location and name of the log file to serve as a log source. The location must be on the same machine as the analytics-agent. You can use wildcards, and you can specify whether to match files one level deep or all log files in the path directory structure. | Required when collection type is from the local filesystem. |
| Exclude Files | Exclude, or blocklist, files from the defined source rule(s). Enter the relative path of the file(s) to exclude, using wildcards to exclude multiple files. | No |
| TCP Port | Specifies the port for the analytics-agent to collect log files from a network connection. This field is not present if the collection type is From local file system. If no port number is provided, port 514 is used. Both the syslog utility and analytics-agent should have root access to send logs to port 514 (binding to ports less than 1024 requires root access). | Required when collection type is from a network connection. |
| Enable path extraction | This checkbox enables extraction of fields from the path name. Add a grok pattern in the text box. The syntax for a grok pattern is %{SYNTAX:SEMANTIC}. For example, to extract | No |
| Start collecting from | Indicate where to begin tailing (collecting the log records). | The default is to collect log records from the beginning of the file. |
| Override time zone | Use this to override the time zone for eventTimestamp in log events. If your log events don't have a time zone assigned and you are not overriding it, the host machine time zone is assigned to the log events. You cannot override the time zone in the pickupTimestamp field. | No |
| Override timestamp format | You can override the format if needed. Time zone formats should conform to Joda-Time Available Time Zones, and the timestamp format should be created with the Joda-Time formatting system. | Yes. The override timestamp format is needed to extract the time correctly from the log file. If you do not provide this field, the eventTimestamp will be equal to pickupTimestamp even if the log line has a timestamp. |
| Auto-Correct duplicate Timestamps | Enable this option to preserve the order of original log messages if the messages contain duplicate timestamps. This adds a counter to preserve the order. | No |
| Collect Gzip files | Enable this to also find gzip files in the specified path. | No |
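To make the `%{SYNTAX:SEMANTIC}` form used for path extraction more concrete, here is a minimal sketch of how such a reference can be expanded into a named regular-expression group. It is not the Analytics Agent's grok implementation: the three-entry `GROK_DEFINITIONS` library, the function names, and the example path are all assumptions made for illustration.

```python
import re

# Hypothetical, tiny pattern library. Real grok libraries define many more
# SYNTAX names, and their definitions may differ from these.
GROK_DEFINITIONS = {
    "WORD": r"\w+",
    "INT": r"[+-]?\d+",
    "NOTSPACE": r"\S+",
}

# Matches one %{SYNTAX:SEMANTIC} reference inside a grok pattern.
GROK_REFERENCE = re.compile(r"%\{(\w+):(\w+)\}")


def grok_to_regex(grok_pattern):
    """Expand each %{SYNTAX:SEMANTIC} into a named group (?P<SEMANTIC>...)."""
    def expand(match):
        syntax, semantic = match.group(1), match.group(2)
        return "(?P<{}>{})".format(semantic, GROK_DEFINITIONS[syntax])
    return GROK_REFERENCE.sub(expand, grok_pattern)


def extract_path_fields(grok_pattern, path):
    """Return the named fields found in the path, or {} if nothing matches."""
    match = re.search(grok_to_regex(grok_pattern), path)
    return match.groupdict() if match else {}


# Invented example path and pattern.
fields = extract_path_fields(
    r"/var/log/%{WORD:app}/%{WORD:env}\.log",
    "/var/log/checkout/prod.log",
)
print(fields)
```

SYNTAX selects which predefined pattern to match, and SEMANTIC becomes the name of the extracted field.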

Field Extraction Tab

The following table describes the fields and actions on this tab. For a detailed procedure on using Auto or Manual Field Extraction, see Field Extraction for Source Rules.

| Section/Field or Action | Description |
|---|---|
| Add Grok Pattern | |
| Message Pattern | This is the grok pattern used to extract fields from the log message. A pattern may be prepopulated when you use an AppDynamics template or an existing source rule as your starting point. You can add or remove grok patterns as needed. |
| Multiline Format | For log files that include log records that span multiple lines (spanning multiple line breaks), use this field to indicate how the individual records in the log file should be identified. The multiline format is not supported when you are collecting log data from a network connection. |
| Extract Key-Value Pairs | |
| Field | Shows the field selected for key-value pair configuration. |
| Split | The delimiter used to separate the key from its value. In this example, "key=value": the split delimiter is the equal sign "=". Multiple comma-separated values can be added. |
| Separator | The delimiter used to separate two key-value pairs. In this example, "key1=value1;key2=value2": the separator is the semicolon ";". Multiple comma-separated values can be added. |
| Trim | A list of characters to remove from the start and/or end of the key or value before storing them. In this example, "_ThreadID_": you can specify the underscore "_" as the trim character to result in "ThreadID". Multiple comma-separated values can be added. |
| Include | A list of key names to capture from the "source". You must provide keys in the include field. If the include field is left blank, no key-value pairs are collected. |
| Actions | |
| Upload Sample file | Browse to a local file to upload a sample log file. |
| Preview | Use to refresh the Preview grid to see the results of the specified field extraction pattern. |
| Auto Field Extraction | |
| Definer Sample | Select a message from the preview grid that is representative of the fields that you want to extract from the log records. You can select only one definer sample per source rule. |
| Refiner Samples | Refines the regular expression to include values that were not included in the original definer sample. |
| Counter Sample | This specifies something to ignore. Refines the regular expression to exclude values that were included by the original definer or refiner sample. |
| Manual Field Extraction | |
| Regular Expression | Add a regular expression to define the field you want to extract. |
| Field Type | Specify the type for the field. |
| Field Name | Automatically generated within the regular expression pattern. This name appears in the Fields list in the Analytics Search UI. |
| Actions | |
| Add Field | Use to add additional regular expressions for extracting more fields. You cannot add more than 20 fields. |
| Preview | Use these buttons to filter the viewable results in the preview grid. |
| Upload Sample File | Upload a sample file from your local file system to use in the preview grid. |
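The Split, Separator, Trim, and Include settings for key-value extraction interact roughly as in the following sketch. This is an approximation for illustration, not the agent's implementation: the `extract_key_values` function is hypothetical, it accepts a single split and separator delimiter (the UI accepts several), and it assumes trimming happens before the include check.

```python
def extract_key_values(text, split="=", separator=";", trim="", include=()):
    """Extract key-value pairs from text (hypothetical helper).

    split:     delimiter between a key and its value
    separator: delimiter between two key-value pairs
    trim:      characters stripped from both ends of keys and values
    include:   key names to keep; an empty list collects nothing
    """
    fields = {}
    for pair in text.split(separator):
        if split not in pair:
            continue  # not a key-value pair
        key, value = pair.split(split, 1)
        key, value = key.strip(trim), value.strip(trim)
        if key in include:
            fields[key] = value
    return fields


print(extract_key_values("key1=value1;key2=value2", include=["key1", "key2"]))
print(extract_key_values("_ThreadID_=42", trim="_", include=["ThreadID"]))
print(extract_key_values("key1=value1;key2=value2"))  # blank include: nothing
```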

Field Management Tab

| Field/Action | Description |
|---|---|
| Select Any Field to Customize | Select the field to add customizations. You can add multiple customizations to a single field. |
| Field Name | This column lists the fields that have customizations. |
| Customize | This column shows the specific customizations. For static fields, the display name is shown. Static fields cannot be further customized. |
| Mask Value | This customization option masks values in the collected data. Specify the starting and ending position in the data and the character to use as the masking value. |
| Replace Value | This customization option replaces the entire value of the field with a static string. |
| Rename | This customization option enables you to rename a field to a more recognizable display name. |
| Field Type | Use to change the data type of the field. For example, from string to number. Available types are String, Boolean, and Number. Note that after a source rule has been saved with a field of a specified data type, you cannot later change the data type of that field. Once the fields are indexed in the analytics database, trying to specify a new type will cause a validation error. |
| Remove | This customization turns off data collection for the field. It can be reversed at a later time. |
| Add Static Field | This action allows you to add a static field to all the log events collected from this source. This field can then be used to search and filter the log data. For example, use this to add Tier, Node, and Application Name to the log data. |
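The Mask Value and Replace Value customizations can be pictured with the short sketch below. The function names are hypothetical, and the sketch assumes that the starting and ending positions are 1-based and inclusive, which the documentation does not state explicitly.

```python
def mask_value(value, start, end, mask_char="*"):
    """Mask the characters from start to end.

    Assumption: positions are 1-based and inclusive.
    """
    if start < 1 or end < start:
        raise ValueError("expected 1 <= start <= end")
    end = min(end, len(value))        # do not mask past the end of the value
    masked_length = max(end - start + 1, 0)
    return value[:start - 1] + mask_char * masked_length + value[end:]


def replace_value(value, replacement):
    """Replace the entire field value with a static string."""
    return replacement


print(mask_value("4111111111111111", 1, 4))
print(replace_value("4111111111111111", "REDACTED"))
```

With positions 1 and 4, the first four characters of the value are replaced by the mask character and the rest is left unchanged.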